
Monitoring LLM usage in OpenWebUI

Overview

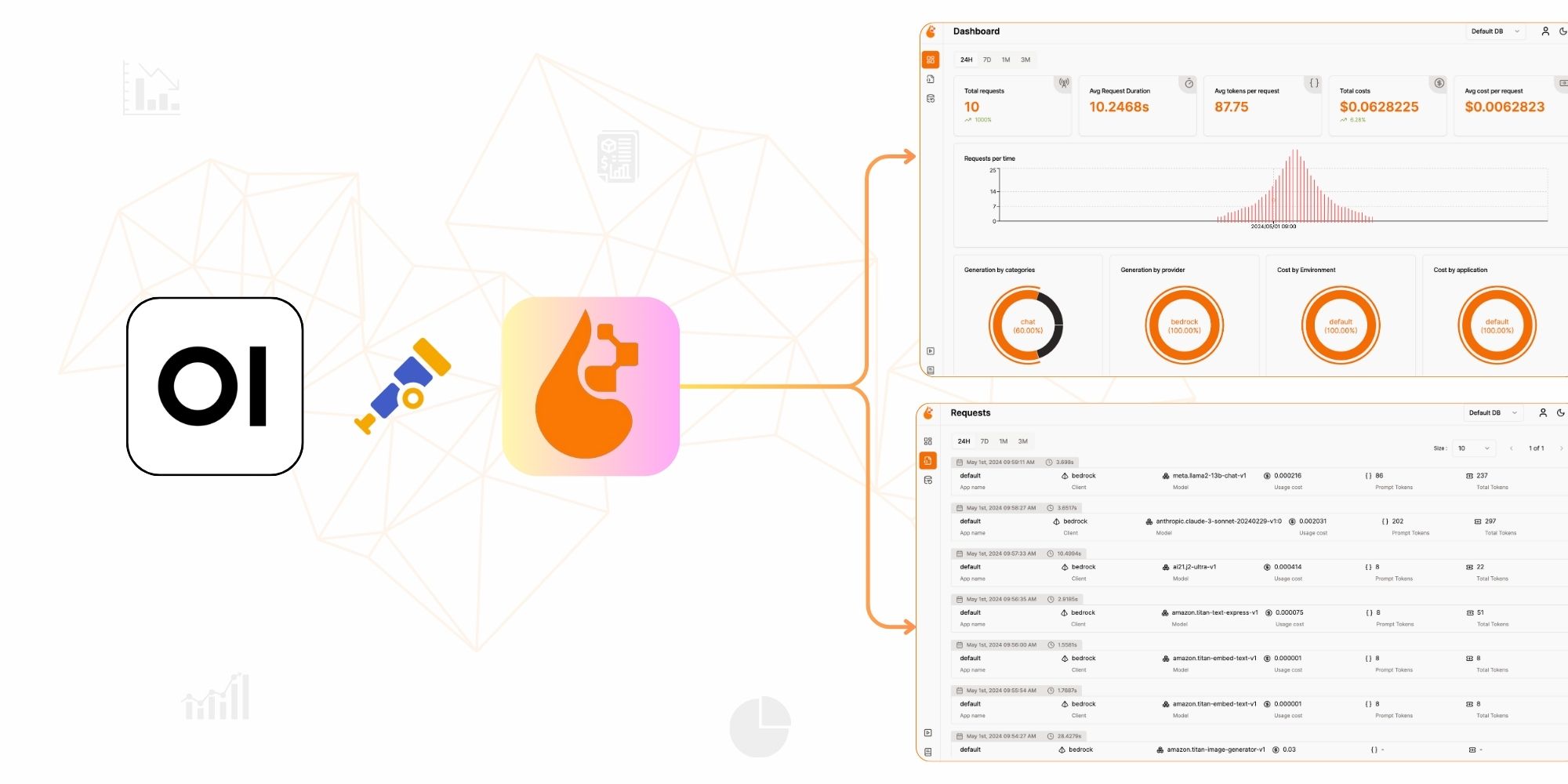

When running inference directly from OpenWebUI, monitoring and observability can be limited. By integrating OpenLIT, we can enhance our ability to track, measure, and optimize LLM usage efficiently. This guide explains how to configure OpenLIT with OpenWebUI using pipelines, ensuring better monitoring through OpenTelemetry (OTEL).

Setting Up the Pipeline

Configuring Pipelines

Follow the official OpenWebUI pipeline documentation to set up pipelines.

You can install pipelines via:

Direct Installation: Installation and Setup Guide

Using Docker: Quick Start with Docker

Creating the Pipeline Code

There are multiple example pipeline scripts available in the OpenWebUI GitHub repository.

Below is a sample pipeline script that integrates OpenLIT with OpenWebUI:

"""
title: openlit monitoring pipeline
author: open-webui
date: 2024-05-30
version: 1.0
license: MIT
description: A pipeline for monitoring open-webui with openlit.
requirements: openlit==1.33.8, openai==1.61.1
"""

from typing import List, Union, Generator, Iterator

from schemas import OpenAIChatMessage
from openai import OpenAI
import openlit


class Pipeline:
    def __init__(self):
        # Display name of this pipeline
        self.name = "Monitoring"

    async def on_startup(self):
        print(f"on_startup:{__name__}")

        # Start OpenLIT so it collects telemetry and exports it over OTLP
        OTEL_ENDPOINT = "http://<my-ip>:4318"
        PRICING_JSON = "/path/to/openlit_pricing.json"

        openlit.init(
            otlp_endpoint=OTEL_ENDPOINT,
            pricing_json=PRICING_JSON,
            collect_gpu_stats=True,
        )

    async def on_shutdown(self):
        print(f"on_shutdown:{__name__}")

    def pipe(
        self, user_message: str, model_id: str, messages: List[dict], body: dict
    ) -> Union[str, Generator, Iterator]:
        print(f"pipe:{__name__}")

        # Client for the OpenAI-compatible server that serves the model
        client = OpenAI(
            base_url="http://localhost:8000/v1",
            api_key="token-abc123",
        )

        # Only the latest user message is sent; model_id, messages, and body are not used
        completion = client.chat.completions.create(
            model="TinyLlama/TinyLlama_v1.1",
            messages=[{"role": "user", "content": user_message}],
        )

        reply = completion.choices[0].message.content
        print(reply)
        return reply

Configuration Notes

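Update OTEL_ENDPOINT and PRICING_JSON to match your setup: the OTLP endpoint of your OpenLIT deployment and the path to your pricing file.

The example requests TinyLlama/TinyLlama_v1.1 from an OpenAI-compatible server at http://localhost:8000/v1. Change the model name, base_url, and api_key to match your own inference server.

The example uses the OpenAI Python client, but you can adapt it to any other model provider supported by OpenLIT. See the OpenLIT documentation for the list of supported frameworks.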
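Rather than hard-coding the endpoint and pricing path in the script, one option is to read them from environment variables. The sketch below is only an illustration: OTEL_EXPORTER_OTLP_ENDPOINT is the standard OpenTelemetry variable name, while OPENLIT_PRICING_JSON, OPENLIT_COLLECT_GPU_STATS, the fallback endpoint, and the helper name load_openlit_settings are hypothetical choices made for this example.

```python
import os
from typing import Mapping, Optional


def load_openlit_settings(env: Optional[Mapping[str, str]] = None) -> dict:
    """Build keyword arguments for openlit.init() from environment variables."""
    env = os.environ if env is None else env
    settings = {
        # Standard OpenTelemetry variable; the fallback value is an assumption.
        "otlp_endpoint": env.get("OTEL_EXPORTER_OTLP_ENDPOINT", "http://127.0.0.1:4318"),
        # Hypothetical variable name; any value other than "true" disables GPU stats.
        "collect_gpu_stats": env.get("OPENLIT_COLLECT_GPU_STATS", "true").lower() == "true",
    }
    # Hypothetical variable name; the key is omitted when no pricing file is configured.
    pricing = env.get("OPENLIT_PRICING_JSON")
    if pricing:
        settings["pricing_json"] = pricing
    return settings


# Inside on_startup() this could replace the hard-coded constants:
#     openlit.init(**load_openlit_settings())
```

Keeping the settings in a plain dictionary means the same pipeline file can be reused across machines without editing it, and only the three arguments already used in the script above are passed to openlit.init.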

Importing the Pipeline into OpenWebUI

To import and configure the pipeline within OpenWebUI:

Navigate to Admin Panel > Settings > Connections.

Click the + button to add a new connection.

Set the API URL to http://localhost:9099 or http://YOUR-IP:9099.

Set the API Key to open-webui.

Once the connection is added and verified, a Pipelines icon will appear next to the API Base URL field.

Your pipeline should now be active!
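If the connection does not verify, it can help to query the Pipelines server directly from Python. The following is a minimal sketch that assumes the server exposes an OpenAI-style /v1/models route returning a JSON object with a "data" list, and that it accepts the API key as a Bearer token; the helper names are made up for this example.

```python
import json
import urllib.request


def build_models_request(base_url: str, api_key: str) -> urllib.request.Request:
    """Prepare a GET request for the server's model listing."""
    url = base_url.rstrip("/") + "/v1/models"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})


def list_pipeline_ids(base_url: str, api_key: str, timeout: float = 5.0) -> list:
    """Return the ids reported by the server; raises on connection or auth errors."""
    request = build_models_request(base_url, api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    # Assumed response shape: {"data": [{"id": "..."}, ...]}
    return [item["id"] for item in payload.get("data", [])]


# Example call, using the URL and key from the steps above:
#     print(list_pipeline_ids("http://localhost:9099", "open-webui"))
```

An HTTP error here usually points to a wrong URL or key, while an empty list suggests the server is running but has not loaded the pipeline file.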

Running OpenLIT with OpenWebUI

Deploy OpenLIT

Before using OpenLIT for monitoring, you need to deploy it by following Step 1 of the OpenLIT setup guide.
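Once OpenLIT is deployed, a quick way to confirm that the OTEL_ENDPOINT used in the pipeline script is reachable is a plain TCP connection test. This is a rough sketch with a hypothetical helper name; it only shows that something is listening on the host and port, not that the listener accepts OTLP data.

```python
import socket
from urllib.parse import urlparse


def otlp_endpoint_reachable(endpoint: str, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to the endpoint's host and port succeeds."""
    parsed = urlparse(endpoint)
    host = parsed.hostname
    if host is None:
        return False
    # Fall back to the scheme's default port when the URL does not name one.
    port = parsed.port or (443 if parsed.scheme == "https" else 80)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Example call with the placeholder from the pipeline script filled in:
#     otlp_endpoint_reachable("http://<my-ip>:4318")
```

Running this from the machine that hosts the Pipelines server is the useful case, since that is where the telemetry is exported from.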

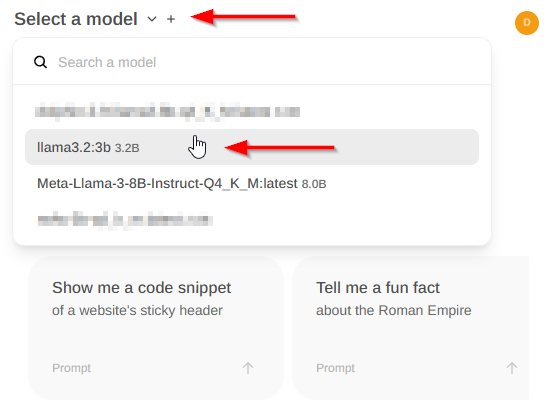

Selecting the Pipeline in OpenWebUI

Once OpenLIT is deployed and your pipeline is active, select the pipeline in OpenWebUI by choosing it under the Select a Model section.

Conclusion

By integrating OpenLIT with OpenWebUI, you gain powerful observability and monitoring capabilities, enabling you to:

Track API usage and performance.

Monitor GPU resource consumption.

Optimize model inference with real-time telemetry.

Next Steps

Explore More Pipelines: Try different pipeline configurations to suit your needs.

Enhance Observability: Customize OpenLIT to collect additional metrics.

Scale Your Deployment: Deploy across multiple instances for better performance tracking.

With this setup, you’re ready to maximize efficiency and monitoring for LLMs in OpenWebUI. 🚀

- Name

- wolfgangsmdt