
Unlocking Seamless GenAI & LLM Observability with OpenLIT

Introduction

In GenAI projects, good observability is key to performance and reliability. Meet OpenLIT, an OpenTelemetry-native observability tool for GenAI and LLM applications that changes the way you monitor and optimize them. With a single line of code (a call to `openlit.init()` in its Python SDK), OpenLIT adds observability to your GenAI project. Let's dive into OpenLIT and explore how it can improve your application observability.

Understanding LIT

LIT, short for Learning Interpretability Tool (originally the Language Interpretability Tool), is a visual and interactive tool introduced by Google for gaining insight into AI models and visualizing their data. It offers a user-friendly interface for understanding how models behave and for analyzing data effectively.

Features that Make OpenLIT Stand Out

Monitor LLM and VectorDB Performance

OpenLIT automatically generates traces and metrics that give insight into your application’s performance and costs. You can track performance separately for each environment (for example, development and production) and use that data to optimize resource usage.
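To make "traces and metrics" concrete, here is a minimal Python sketch of what a single trace entry can capture: the operation name, the environment, the duration, and whether the call succeeded. It does not use OpenLIT or OpenTelemetry; `traced`, `SPANS`, and `fake_llm_call` are hypothetical names, and the in-memory list stands in for a real trace exporter.

```python
import functools
import time
from typing import Any, Callable

# Hypothetical in-memory store standing in for a real trace exporter.
SPANS: list[dict[str, Any]] = []


def traced(name: str, environment: str) -> Callable:
    """Record one span-like entry (name, environment, duration, status) per call."""
    def decorator(func: Callable) -> Callable:
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                status = "error"
                raise
            finally:
                # Runs on success and failure, so every call leaves a record.
                SPANS.append({
                    "name": name,
                    "environment": environment,
                    "duration_s": time.perf_counter() - start,
                    "status": status,
                })
        return wrapper
    return decorator


@traced("chat.completion", environment="production")
def fake_llm_call(prompt: str) -> str:
    # Placeholder for a real LLM client call.
    return prompt.upper()


print(fake_llm_call("hello"))                      # HELLO
print(SPANS[0]["name"], SPANS[0]["environment"])   # chat.completion production
```

Tagging each record with an environment is what lets the same data be sliced per deployment later.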

Cost Tracking for Custom and Fine-Tuned Models

You can define and track costs for custom and fine-tuned models by supplying OpenLIT with a custom pricing JSON file. This makes budgeting more precise and keeps reported costs aligned with your project’s actual pricing.
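As an illustration of how a pricing file turns token counts into costs, here is a small Python sketch. The JSON layout (a `chat` section keyed by model name, with `promptPrice` and `completionPrice` per 1,000 tokens) and the `chat_cost` helper are assumptions made for this example; check the OpenLIT documentation for the actual schema and for how the file is passed to the SDK.

```python
import json

# Hypothetical pricing file contents; the real OpenLIT schema may differ.
# Prices are assumed to be in USD per 1,000 tokens.
PRICING_JSON = """
{
  "chat": {
    "my-fine-tuned-model": {"promptPrice": 0.002, "completionPrice": 0.004}
  }
}
"""


def chat_cost(pricing: dict, model: str, prompt_tokens: int, completion_tokens: int) -> float:
    """Return the cost of one chat request, or 0.0 if the model has no price entry."""
    prices = pricing.get("chat", {}).get(model)
    if prices is None:
        return 0.0
    return (
        (prompt_tokens / 1000) * prices["promptPrice"]
        + (completion_tokens / 1000) * prices["completionPrice"]
    )


pricing = json.loads(PRICING_JSON)
print(round(chat_cost(pricing, "my-fine-tuned-model", 1500, 500), 6))  # 0.005
```

Returning 0.0 for unknown models keeps unpriced requests visible in totals instead of failing; a stricter setup might raise instead.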

OpenTelemetry-native SDKs

OpenLIT supports OpenTelemetry natively, ensuring smooth integration without added complexity. Being vendor-neutral, it easily fits into your existing AI stack.

OTel Auto Instrumentation Capabilities

OpenLIT complies with the OpenTelemetry community’s Semantic Conventions and is updated regularly to stay aligned with them. It provides insights to improve the performance and stability of LLM applications, whether they use hosted LLMs like OpenAI and HuggingFace, self-hosted LLMs like Ollama or GPT4All, or vector databases like ChromaDB and Pinecone.
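Auto instrumentation generally works by wrapping a library’s methods once at startup, so existing application code emits telemetry without changes. The Python sketch below shows that idea with monkey-patching. `FakeChatClient` and `instrument` are hypothetical stand-ins, not OpenLIT’s code, and the `gen_ai.*` attribute names follow recent versions of the OpenTelemetry GenAI semantic conventions, which have been renamed across releases.

```python
import functools
from typing import Any


class FakeChatClient:
    """Stand-in for a third-party LLM client; not a real SDK."""

    def create(self, model: str, prompt: str) -> dict[str, Any]:
        completion = f"echo: {prompt}"
        return {
            "text": completion,
            # Word counts stand in for real token counts.
            "usage": {
                "input_tokens": len(prompt.split()),
                "output_tokens": len(completion.split()),
            },
        }


def instrument(client_cls: type, spans: list[dict[str, Any]]) -> None:
    """Patch client_cls.create in place so every call records GenAI attributes."""
    original = client_cls.create
    if getattr(original, "_instrumented", False):
        return  # already patched; avoid double-wrapping

    @functools.wraps(original)
    def wrapper(self, model: str, prompt: str) -> dict[str, Any]:
        response = original(self, model, prompt)
        spans.append({
            "gen_ai.system": "fake",
            "gen_ai.request.model": model,
            "gen_ai.usage.input_tokens": response["usage"]["input_tokens"],
            "gen_ai.usage.output_tokens": response["usage"]["output_tokens"],
        })
        return response

    wrapper._instrumented = True
    client_cls.create = wrapper


spans: list[dict[str, Any]] = []
instrument(FakeChatClient, spans)                      # done once at startup
FakeChatClient().create("demo-model", "hello world")   # application code is unchanged
print(spans[0]["gen_ai.usage.input_tokens"])           # 2
```

The `_instrumented` flag makes `instrument` idempotent, which matters when several modules try to set up instrumentation.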

Here is the list of supported GenAI and LLM libraries:

LLMs

- OpenAI
- Ollama
- Cohere
- Anthropic
- GPT4All
- Azure OpenAI
- Mistral
- HuggingFace
- Amazon Bedrock
- Vertex AI
- Groq

Vector DBs

- ChromaDB
- Pinecone
- Qdrant
- Milvus

Frameworks

- LangChain
- LiteLLM
- LlamaIndex
- Haystack
- EmbedChain

Here is the list of observability platforms OpenLIT can send data to:

- Prometheus
- OpenTelemetry Collector
- Grafana
- Jaeger
- New Relic
- Datadog
- SigNoz
- Dynatrace
- OpenObserve
- Highlight.io
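Because OpenLIT is OpenTelemetry-native, sending data to these platforms usually comes down to pointing an OTLP exporter at the right endpoint; backends that do not ingest OTLP directly are often reached through an OpenTelemetry Collector. The `OTEL_EXPORTER_OTLP_ENDPOINT` and `OTEL_EXPORTER_OTLP_HEADERS` environment variables come from the OpenTelemetry specification, where headers are comma-separated `key=value` pairs with percent-encoded values. The sketch below parses them; `parse_otlp_headers` is a hypothetical helper, and whether OpenLIT reads these variables directly is worth confirming in its docs.

```python
import os
from urllib.parse import unquote


def parse_otlp_headers(raw: str) -> dict[str, str]:
    """Parse 'key1=value1,key2=value2' (values percent-encoded) into a dict."""
    headers: dict[str, str] = {}
    for item in raw.split(","):
        item = item.strip()
        if not item or "=" not in item:
            continue  # skip empty or malformed entries
        # Split on the first '=' only, so values may themselves contain '='.
        key, value = item.split("=", 1)
        headers[key.strip()] = unquote(value.strip())
    return headers


# Fall back to a local collector if nothing is configured.
endpoint = os.environ.get("OTEL_EXPORTER_OTLP_ENDPOINT", "http://localhost:4318")
headers = parse_otlp_headers(os.environ.get("OTEL_EXPORTER_OTLP_HEADERS", ""))

# Print only header names, since values are often API keys.
print(endpoint, sorted(headers))
```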

Sleek and Intuitive Design

The OpenLIT UI is a dashboard built with Next.js and backed by ClickHouse, which keeps it fast and responsive. Its layout was shaped by feedback from many AI developers, making it simple to navigate and to find the information you need quickly.

Overall Usage

Shows total requests, request duration, token usage, cost analysis, and more, using both real-time and historical data.
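The headline numbers on a usage dashboard are simple aggregates over per-request data. This Python sketch, using a hypothetical `RequestRecord` type and made-up sample values, computes the same kind of summary in memory; it is not how OpenLIT actually queries ClickHouse.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class RequestRecord:
    """Hypothetical per-request record, similar to what a trace might carry."""
    model: str
    duration_s: float
    total_tokens: int
    cost_usd: float


def summarize(records: list[RequestRecord]) -> dict[str, float]:
    """Aggregate request count, average duration, total tokens, and total cost."""
    if not records:
        return {"total_requests": 0, "avg_duration_s": 0.0, "total_tokens": 0, "total_cost_usd": 0.0}
    return {
        "total_requests": len(records),
        "avg_duration_s": mean(r.duration_s for r in records),
        "total_tokens": sum(r.total_tokens for r in records),
        "total_cost_usd": sum(r.cost_usd for r in records),
    }


# Made-up sample data for illustration only.
records = [
    RequestRecord("model-a", 1.2, 300, 0.004),
    RequestRecord("model-a", 0.8, 150, 0.002),
    RequestRecord("model-b", 2.0, 900, 0.010),
]
print(summarize(records))
```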

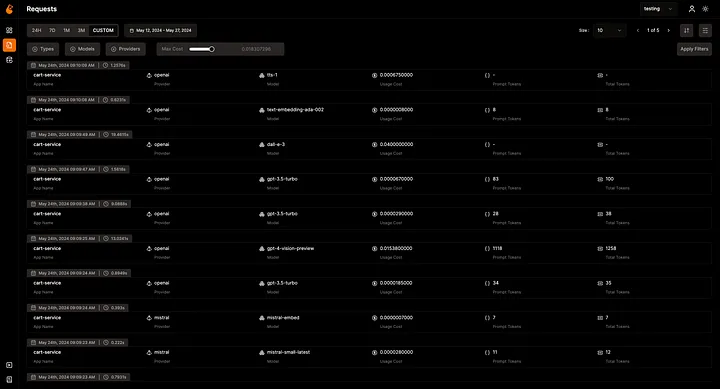

Requests

Provides an integrated view of traces for tracking individual requests. You can also apply dynamic filters to narrow the view, or sort the results by cost, token count, and other fields.
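Filtering and sorting requests boils down to applying a predicate and then ordering by one field. The sketch below shows that logic in memory with a hypothetical `query_requests` helper and made-up records; the real UI works against data stored in ClickHouse rather than a Python list.

```python
from collections.abc import Callable, Iterable
from typing import Any


def query_requests(
    requests: Iterable[dict[str, Any]],
    where: Callable[[dict[str, Any]], bool] = lambda r: True,
    sort_by: str = "cost",
    descending: bool = True,
) -> list[dict[str, Any]]:
    """Filter request records with a predicate, then sort them by one field."""
    return sorted((r for r in requests if where(r)), key=lambda r: r[sort_by], reverse=descending)


# Made-up request records for illustration only.
requests = [
    {"id": "r1", "model": "model-a", "tokens": 300, "cost": 0.004},
    {"id": "r2", "model": "model-b", "tokens": 900, "cost": 0.010},
    {"id": "r3", "model": "model-a", "tokens": 150, "cost": 0.002},
]

top_model_a = query_requests(requests, where=lambda r: r["model"] == "model-a", sort_by="tokens")
print([r["id"] for r in top_model_a])  # ['r1', 'r3']
```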

Database config

Allows you to add and switch between multiple ClickHouse databases, making it convenient to manage different contexts with the same interface.

Join us in reshaping the future of AI observability! 🚀 Share your thoughts, suggest features, and contribute to the project. Let’s make AI observability open and better together!

- Aman Agarwal

- @_typeofnull